Dries Verbiest

Head of IT Architecture

Re-imagining Our Foundations

The growing demand for personalised and digitised services amplifies the technological complexity many IT departments face. Our IT team isn't exempt from that. We've struggled to meet our clients' and business units' expectations. The ever-evolving digitalisation of our services requires us to use a wider variety of technologies to build more applications than ever.

Alas, our existing architecture isn't equipped for this diversity of applications. We designed it years ago to host a handful of robust macro services, not the multitude of microservices we need today. We still need to install each application and its dependencies manually on every server, making developing and scaling an application time-consuming, complex and prone to conflicts.

Deployment is equally complex and rigid. Despite extensive testing, we’re never 100% certain that an application will function without hiccups in all its intended environments. On top of that, we can't swiftly revert to a previous version, roll back an application in a particular environment, or quickly find and fix issues. Instead, we need to remove, rebuild and re-deploy the application.

Laying the architectural groundwork

We knew we had to overhaul our architecture to become the digitalised and data-driven company we need to be.

Cue containers.

Containers are best described as lightweight, self-contained packages that hold all the necessary components, or layers, required to build and deploy an application. Unlike virtual machines, they don't carry a full operating system of their own but share the host's OS kernel. They are the virtual counterparts of physical shipping containers and consist of three layers:

- an operating system layer that provides the base OS files and libraries the application relies on;

- the application framework layer, with all its dependencies;

- the application itself.
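To make the layering concrete, here is a minimal Python sketch of how stacked layers combine into the filesystem a running container sees. Each layer is modelled as a dict mapping file paths to contents, and a file in an upper layer hides the same path in a lower one. This is a simplified model of union filesystems such as OverlayFS, assumed for illustration only: it ignores deletions, permissions and metadata, and the paths and contents are hypothetical.

```python
from typing import Dict, List

Layer = Dict[str, str]  # toy model: file path -> file contents

def merged_view(layers: List[Layer]) -> Layer:
    """Stack layers bottom to top; an upper layer hides the same path in lower ones."""
    view: Layer = {}
    for layer in layers:  # layers[0] is the bottom (operating system) layer
        view.update(layer)
    return view

# Hypothetical contents for the three layers listed above.
os_layer = {"/bin/sh": "busybox shell", "/etc/motd": "default banner"}
framework_layer = {"/usr/lib/framework.so": "framework runtime"}
app_layer = {"/app/main": "our service", "/etc/motd": "custom banner"}

container_fs = merged_view([os_layer, framework_layer, app_layer])
print(container_fs["/etc/motd"])  # custom banner
print(len(container_fs))          # 4 distinct paths
```

Because the lower layers are never modified, many containers can share the same OS and framework layers while differing only in their top layer.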

After careful consideration, we embarked on this journey with Linux containers based on Alpine Linux, which Microsoft supports. Compared with the alternative, Windows containers, Linux containers offer more features, use resources more efficiently and are the market standard.

Demystifying container operation

If the r/container subreddit is any indication, many IT professionals find container operation complex. The basic mechanisms are, however, straightforward.

Each container is created from a blueprint called the 'app image', or simply 'image'. Images are saved and stored in a central registry; we chose Azure Container Registry (ACR). When we build an application, its image is first created in the ACR. To run the application, the Docker engine on a server retrieves the image from the ACR and extracts its content, downloading either the entire image or only the parts (layers) it doesn't already have.
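The pull step can be sketched as follows. Image layers are identified by their content, so an engine only downloads the layers it does not already hold locally. In this Python sketch, the `Registry` and `Engine` classes, the image tags `orders-api:1.4`/`orders-api:1.5` and the short `sha256:...` labels are hypothetical stand-ins, not the ACR or Docker APIs; real digests are full SHA-256 hashes.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass
class Registry:
    """Toy stand-in for a central registry such as ACR: image tag -> ordered layer digests."""
    images: Dict[str, List[str]] = field(default_factory=dict)

    def push(self, tag: str, layers: List[str]) -> None:
        self.images[tag] = layers

@dataclass
class Engine:
    """Toy stand-in for the Docker engine on one server, with a local layer cache."""
    cache: Set[str] = field(default_factory=set)

    def pull(self, registry: Registry, tag: str) -> List[str]:
        """Download only the layers missing from the cache; return what was fetched."""
        fetched = [d for d in registry.images[tag] if d not in self.cache]
        self.cache.update(fetched)
        return fetched

acr = Registry()
acr.push("orders-api:1.4", ["sha256:os", "sha256:framework", "sha256:app-1.4"])
acr.push("orders-api:1.5", ["sha256:os", "sha256:framework", "sha256:app-1.5"])

server = Engine()
print(server.pull(acr, "orders-api:1.4"))  # ['sha256:os', 'sha256:framework', 'sha256:app-1.4']
print(server.pull(acr, "orders-api:1.5"))  # ['sha256:app-1.5']
```

Only the top application layer travels over the network for the new version, which is why shipping a small code change is cheap once the base layers are cached.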

Docker is a platform-as-a-service that uses OS-level virtualisation to host and transport containers and to facilitate building and deploying applications. Think of it as a hosting layer on top of the server that removes the need to configure applications directly on servers. Instead, applications and their dependencies are packaged into containers, which Docker manages and runs. As a result, application development and deployment become largely independent of the underlying infrastructure, and configurations stay consistent across all servers.
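One reason configurations stay consistent is that images are content-addressed: their identity is derived from a hash of their content, so two servers running the same digest run byte-identical layers. The Python sketch below illustrates that principle with `hashlib`. It is a simplification assumed for illustration: real OCI images hash a JSON manifest that lists compressed layer digests, whereas `image_digest` here simply hashes the ordered layer digests, and the layer contents are hypothetical.

```python
import hashlib
from typing import List

def layer_digest(content: bytes) -> str:
    """Content address of one layer: SHA-256 over its bytes."""
    return "sha256:" + hashlib.sha256(content).hexdigest()

def image_digest(layers: List[bytes]) -> str:
    """Toy image identity: hash of the ordered layer digests (not the real OCI manifest)."""
    joined = "\n".join(layer_digest(layer) for layer in layers)
    return "sha256:" + hashlib.sha256(joined.encode()).hexdigest()

build = [b"alpine base", b"framework + deps", b"app v1.4"]
on_server_a = image_digest(build)
on_server_b = image_digest(list(build))  # the same bytes, pulled onto another server
rebuilt = image_digest([b"alpine base", b"framework + deps", b"app v1.4 hotfix"])

print(on_server_a == on_server_b)  # True: identical content, identical identity
print(on_server_a == rebuilt)      # False: any change yields a new digest
```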

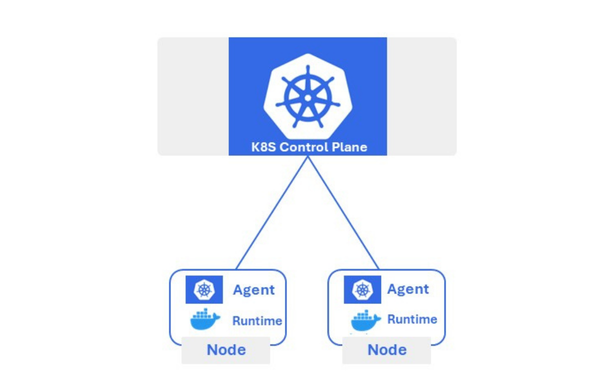

We adopted Azure Kubernetes Service (AKS) as our orchestration tool to manage the resources of these applications and containers efficiently. AKS ensures that each application, running in its container, is reachable by its intended end users. It automates container deployment, placing containers on suitable servers based on environment-specific requirements and on their CPU and runtime needs.
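Placement is handled by the Kubernetes scheduler, which first filters out nodes that cannot satisfy a Pod's requirements and then scores the remaining ones. Here is a minimal Python sketch of that filter-then-score idea, assuming each node advertises free CPU (in millicores), free memory and a set of labels. The node names, labels and the "most CPU left over" scoring rule are illustrative assumptions; the real scheduler uses many configurable plugins.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB
    labels: Dict[str, str] = field(default_factory=dict)

@dataclass
class PodSpec:
    cpu_m: int
    mem_mi: int
    node_selector: Dict[str, str] = field(default_factory=dict)

def schedule(pod: PodSpec, nodes: List[Node]) -> Optional[str]:
    # Filter: keep nodes with enough CPU and memory and every required label.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m
        and n.free_mem_mi >= pod.mem_mi
        and all(n.labels.get(k) == v for k, v in pod.node_selector.items())
    ]
    if not feasible:
        return None  # in Kubernetes the Pod would stay Pending
    # Score: prefer the node with the most CPU left after placement (toy rule).
    best = max(feasible, key=lambda n: n.free_cpu_m - pod.cpu_m)
    return best.name

nodes = [
    Node("node-a", 500, 2048, {"env": "test"}),
    Node("node-b", 1500, 4096, {"env": "prod"}),
    Node("node-c", 3000, 1024, {"env": "prod"}),
]
print(schedule(PodSpec(cpu_m=1000, mem_mi=2048, node_selector={"env": "prod"}), nodes))  # node-b
```

Here `node-a` is rejected by its environment label and `node-c` lacks memory, so only `node-b` remains.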

Kubernetes: orchestrating container harmony

Kubernetes relies on various resources. It starts orchestrating by grouping containers into 'Pods'. These fundamental resources act as building blocks: each Pod houses one or more containers, which share the Pod's network address and can share storage volumes, so they collaborate efficiently.

Kubernetes then scales these Pods using 'ReplicaSets'. A ReplicaSet keeps the requisite number of Pod replicas running at all times, which is vital for handling increased demand and for replacing failing Pods without disruption.
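A ReplicaSet works as a control loop: it repeatedly compares the desired number of replicas with the Pods it observes and creates or deletes Pods to close the gap. The Python sketch below models only that reconciliation step. The `reconcile` function and the `web-N` Pod names are hypothetical; the real controller selects Pods by label and works through the Kubernetes API server.

```python
import itertools
from typing import List

_ids = itertools.count(1)  # source of unique names for new Pods

def reconcile(desired: int, running: List[str]) -> List[str]:
    """One pass of a ReplicaSet-style control loop over the names of running Pods."""
    pods = list(running)
    while len(pods) < desired:  # too few Pods: start replacements
        pods.append(f"web-{next(_ids)}")
    while len(pods) > desired:  # too many Pods: scale down
        pods.pop()
    return pods

pods = reconcile(3, [])                   # scale up from zero
pods = [p for p in pods if p != "web-2"]  # web-2 fails
pods = reconcile(3, pods)                 # a replacement is created
print(len(pods), pods)  # 3 ['web-1', 'web-3', 'web-4']
pods = reconcile(5, pods)                 # demand increases
print(len(pods))  # 5
```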

'Services' act as traffic directors: each Service gives a group of Pods a stable address and distributes the load across them, so communication keeps flowing even as individual Pods come and go. Lastly, the storage layer (in Kubernetes terms, PersistentVolumes) provides data storage and keeps data intact even when Pods restart or scale.
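The traffic-director role can be pictured as a stable entry point that only sends requests to Pods that are currently ready. The sketch below assumes a simple round-robin policy for illustration; in a real cluster, traffic is distributed by kube-proxy or the cluster's network plugin, whose exact algorithm depends on its mode. The `ToyService` class and Pod names are hypothetical.

```python
from typing import Dict, List

class ToyService:
    """Stable entry point that round-robins requests over ready Pods."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.ready: Dict[str, bool] = {}  # Pod name -> readiness
        self._next = 0

    def set_ready(self, pod: str, is_ready: bool) -> None:
        self.ready[pod] = is_ready

    def route(self) -> str:
        endpoints: List[str] = [p for p, ok in self.ready.items() if ok]
        if not endpoints:
            raise RuntimeError(f"no ready endpoints behind {self.name}")
        pod = endpoints[self._next % len(endpoints)]
        self._next += 1
        return pod

svc = ToyService("orders")
for pod in ("orders-1", "orders-2", "orders-3"):
    svc.set_ready(pod, True)
print([svc.route() for _ in range(4)])  # ['orders-1', 'orders-2', 'orders-3', 'orders-1']
svc.set_ready("orders-2", False)        # e.g. the Pod is restarting
print([svc.route() for _ in range(2)])  # ['orders-1', 'orders-3']
```

Clients keep calling the same name (`orders`) while the set of Pods behind it changes.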

Kubernetes: the key principles

- Kubernetes (K8s) is open source and was originally created by Google

- It orchestrates containers across multiple servers: scaling, deployment, and starting, stopping and deleting containers

- It is based on two kinds of nodes:

  - a master node (control plane)

  - worker nodes on the on-premises infrastructure

- It provides elasticity on the on-premises infrastructure

- By contrast, in our current hosting solutions, all applications are deployed on all servers

- An application consumes a minimum amount of memory, even when it isn't used
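A back-of-the-envelope calculation shows why deploying every application on every server is costly. The figures below (10 servers, 40 applications, 200 MiB of idle memory per instance, 2 replicas per application under orchestration) are hypothetical and only illustrate the arithmetic.

```python
def idle_memory_mib(apps: int, copies_per_app: int, baseline_mib: int) -> int:
    """Memory held by application instances before they serve any traffic."""
    return apps * copies_per_app * baseline_mib

SERVERS, APPS, BASELINE_MIB, REPLICAS = 10, 40, 200, 2  # hypothetical figures

everywhere = idle_memory_mib(APPS, SERVERS, BASELINE_MIB)      # every app on every server
orchestrated = idle_memory_mib(APPS, REPLICAS, BASELINE_MIB)   # each app on 2 replicas only
print(everywhere, orchestrated)  # 80000 16000
```

With these assumptions, idle memory scales with the number of replicas an application actually needs rather than with the number of servers.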

Reaping the benefits

The most significant advantage lies in the speed and flexibility with which we can update, develop and deploy new applications. We no longer need to implement and customise applications manually for each server and environment.

Our new architecture isolates applications and guarantees the availability of a minimum number of container instances. This approach saves considerable time and resources, especially when fine-tuning applications, replacing a failed container instance or addressing performance issues in specific environments.

It also empowers us to employ advanced release strategies like rolling updates or blue/green deployments, minimising or eliminating potential downtime during releases.
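A rolling update replaces old Pods with new ones in small steps, so some instances keep serving throughout. Kubernetes Deployments control the pace with the `maxSurge` and `maxUnavailable` settings. The Python sketch below simulates that idea, assuming every new Pod becomes ready immediately; the `rolling_update` function and the `v1`/`v2` labels are hypothetical.

```python
from typing import List

def rolling_update(replicas: int, max_surge: int = 1, max_unavailable: int = 0) -> List[List[str]]:
    """Replace 'v1' Pods with 'v2' Pods; return the Pod set after each step."""
    if max_surge + max_unavailable < 1:
        raise ValueError("max_surge + max_unavailable must be at least 1 to make progress")
    pods = ["v1"] * replicas
    history = [list(pods)]
    while pods.count("v2") < replicas or "v1" in pods:
        # 1. Surge: start new Pods, never exceeding replicas + max_surge in total.
        new_needed = replicas - pods.count("v2")
        room = replicas + max_surge - len(pods)
        pods += ["v2"] * max(0, min(new_needed, room))
        # 2. Retire old Pods while at least replicas - max_unavailable remain.
        while "v1" in pods and len(pods) - 1 >= replicas - max_unavailable:
            pods.remove("v1")
        history.append(list(pods))
    return history

steps = rolling_update(replicas=3, max_surge=1, max_unavailable=0)
for step in steps:
    print(step)
# ['v1', 'v1', 'v1']
# ['v1', 'v1', 'v2']
# ['v1', 'v2', 'v2']
# ['v2', 'v2', 'v2']
print(min(len(s) for s in steps))  # 3: capacity never drops below the desired count
```

With `max_unavailable=0`, capacity is preserved at the cost of briefly running one extra Pod; raising `max_unavailable` instead trades some capacity for not needing spare resources.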

Furthermore, testing has become a lot easier. Because containers are portable across environments, we can test both the OS layer and the application code in various settings, and we can implement swift exit plans.

Finally, we benefit from the containers' efficiency. They only utilise the necessary resources and share the host OS kernel, which minimises overhead compared to traditional virtual machines.

Conclusion

Our clients' ever-evolving digital demands led us to embrace container technology, Kubernetes orchestration, and an array of essential resources. This new, composable architecture positions us to build, adjust and deploy applications efficiently. On top of that, we're now better equipped to keep up with and leverage outside technologies to our clients' benefit.

One of the ways we aim to do that is by allowing our new architecture to connect with outside technologies. We're making leaps on that front and will soon publish our experiences. In the meantime, please read our other articles below and discover other projects that help provide digital microservices.