Clients dictate everything. As our insurance clientele has become more digitally proficient, businesses, including ours, must leverage data to offer personalised digital products and streamline operations. However, our on-premises data platform became a bottleneck. Unable to efficiently handle the vast amounts of structured and unstructured data, we opted to transition to a cloud-based environment. Here's how that went.

Patrick Sergysels

Head of Data Management

Chief Information Security Officer

Changing client demands

As our clients' demands evolve, so must AG Insurance. They expect on-demand, personalised and frictionless digital microservices, especially during high-pressure moments. We've committed ourselves to meeting those demands.

But to do so, we need a profound understanding of our clients' needs. The key to that is a vast amount of structured and unstructured customer data that all departments should have at the ready.

Infrastructure in need of a revamp

That's where our technological shoe pinched. An internal survey and gap assessment with our business colleagues revealed that we lacked the means to handle the required data, impeding us from developing more complex use cases.

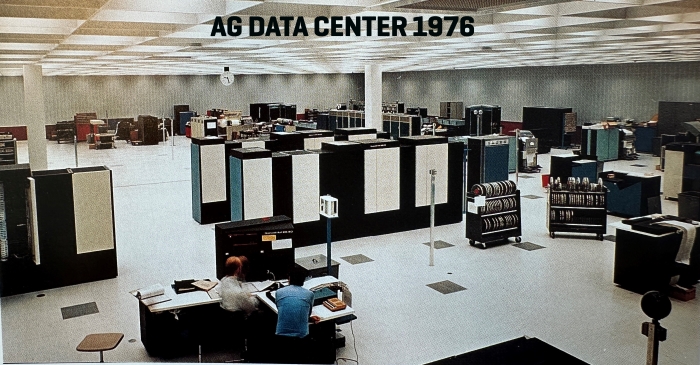

Our on-prem data platform was designed to serve as a data warehouse and to refresh data monthly. Throughout the years, we scaled our platform to support daily updates, but it remained unfit for advanced real-time analytics.

We needed more scalability and elasticity to handle the amount and the type of data we wanted to harvest to provide those services. The data platform didn't support unstructured data like videos, images, weather data, etc., significantly limiting the scope of future services. On top of that, we couldn't implement automation processes, nor could we execute reasonably simple tasks, like producing basic customer information reports on time.

The fact that we still used decade-old datasets technologies illustrates how desperately we needed to revamp our architecture and technology to align with our ambitions and needs. We decided that cloud technology would give us the best opportunity to modernise our core systems and provide scalability and elasticity.

Relying on trusted partners

Given this project's magnitude, we needed assistance from technology leaders. We chose Microsoft as our target system to build our new data platform for various reasons. AG Insurance already relies on technologies in Microsoft's data stack and on-premises data warehouse functionalities such as Power BI, SSAS and SQL-Server.

More importantly, we were enthralled by Microsoft's vision and willingness to invest in developing their products. A small pilot using dummy files confirmed our belief that Microsoft was the right target. It also allowed us to reassess our technological needs, finetune our resource requirements and budget the possible cost of this operation.

Our second partner is Hexaware. Hexaware already handled the development and maintenance of our on-premises data platform and has demonstrated its expertise and reliability over the years. Our partners recreated the data structure, rewrote the pipeline, and transferred the entire data history from our on-prem data environment to the new cloud platform.

How we built our new platform

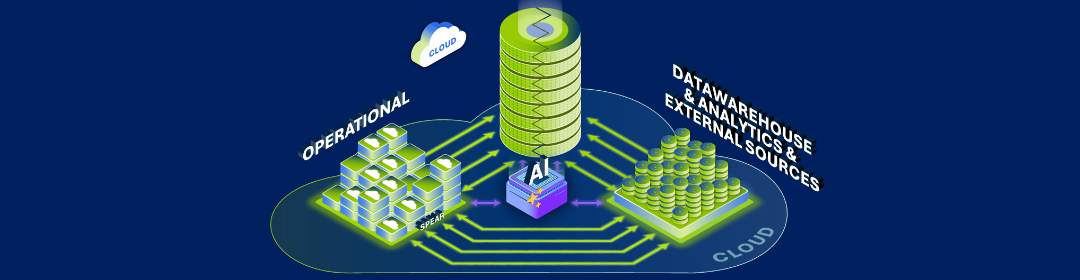

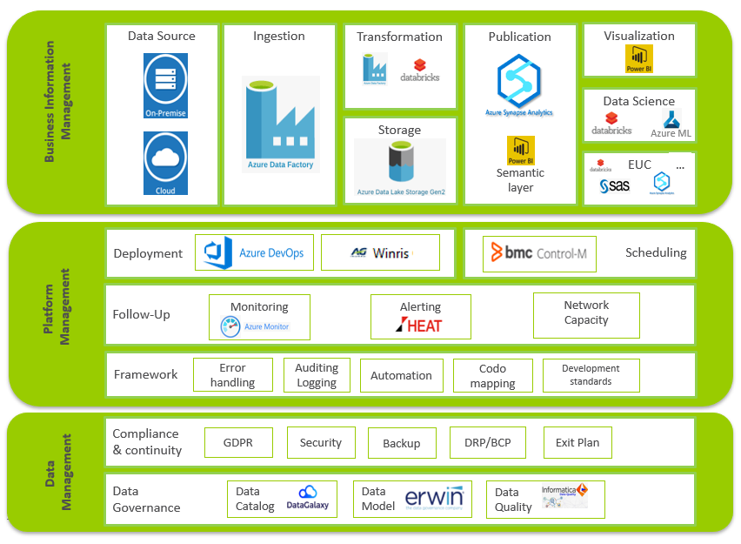

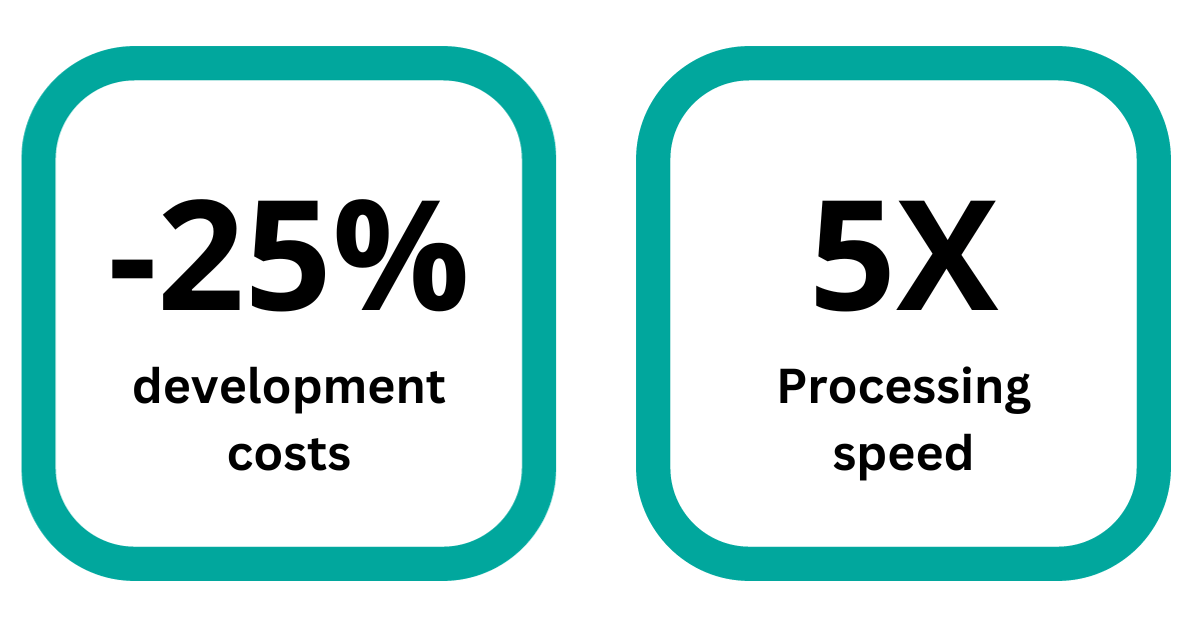

We built our data platform in Microsoft Azure Synapse Analytics. The envisioned platform had to comprise different storage layers, with technical components to increase automation and standardisation, reduce development costs and monitor our data efforts. Our team implemented the architectural framework using Microsoft Azure Databricks on top of Microsoft Azure Data Lake Storage Gen2 to manage its vast volumes of data and support advanced analytics.

Additionally, we relied on Azure SQL DB as a central repository for configuring the data automation framework and Synapse Dedicated SQL pools as a publication layer for its downstream systems. We also deployed Azure Data Factory to map data flows and enable us to build scalable ETLs visually. Simultaneously, we created a self-service end-user zone using Microsoft Power BI and Azure tools like Databricks where business users benefit from the data we prepare to report on.

On top of all these Microsoft tools, we created our own framework consisting of a series of technical services using PySpark. This framework allows for quick and cost-effective data uploading in the lower layers.

Taking our the new data platform for a spin

When we built our target architecture, we took our data platform for a test drive with Hexaware as our trusted co-pilot. We opted for a phased approach, migrating 1 or 2 business lines at a time instead of a Big Bang.

This allowed us to assess the process, test the environment to its fullest extent and uncover pain points, which it did. The pilot project revealed two significant flaws we had to address before moving on to the following business lines.

The first flaw was our testing strategy. We intended to test the new platform's performance, pipelines, and integration by copying data history from our on-premises data platform to our new cloud environment and letting both data platforms run simultaneously. Similar results of both runs would indicate a successful migration from one platform to the other. A deviation would reveal an issue on the new platform.

The test did uncover discrepancies. But while we expected to find the causes on the new platform, we quickly realised that the differences could’ve been caused by a wider array of factors than we anticipated. This made locating and solving the causes of these discrepancies much more laborious and time-consuming.

The second flaw was the data quality in the on-premises environment. Our new data platform is equipped with stringent data quality settings for future data uploads. We didn't anticipate these settings flagging erroneous data sets coming from our on-premises data platform. It prevented the migration of bad data but required much fixing.

Once we overcame these hurdles, we prevented them from occurring when migrating the ensuing business lines. The last business lines will be migrated by March 2024.

Reaping the benefits

The building blocks for future innovation

The most significant benefit is the limitless ways we can innovate to improve our business processes, services, and clients' experiences. By embracing the power of data and cloud technology, we've laid the groundwork to explore these innovations.

For instance, the new data platform will soon allow us to leverage advanced analytics services to facilitate claims processes. Clients could upload photos as evidence of their damages. An algorithm would then compare the damage shown on the visuals to a vast volume of data, assess the cost of the damage and execute a pay-out.

CONCLUSION

The possibilities are endless. But to fully harness our new data platform's potential, we needed to integrate it with an equally future-proof operational infrastructure, build a new architecture and transform our culture. Read about our new technological and cultural foundations in one of our articles below.